Content that

Recognized leader in enterprise AI content solutions

.avif)

Meet your AI content co-pilot

Conventional B2B content, trapped in outdated formats, gets locked out of modern digital channels and consumer habits

.avif)

.avif)

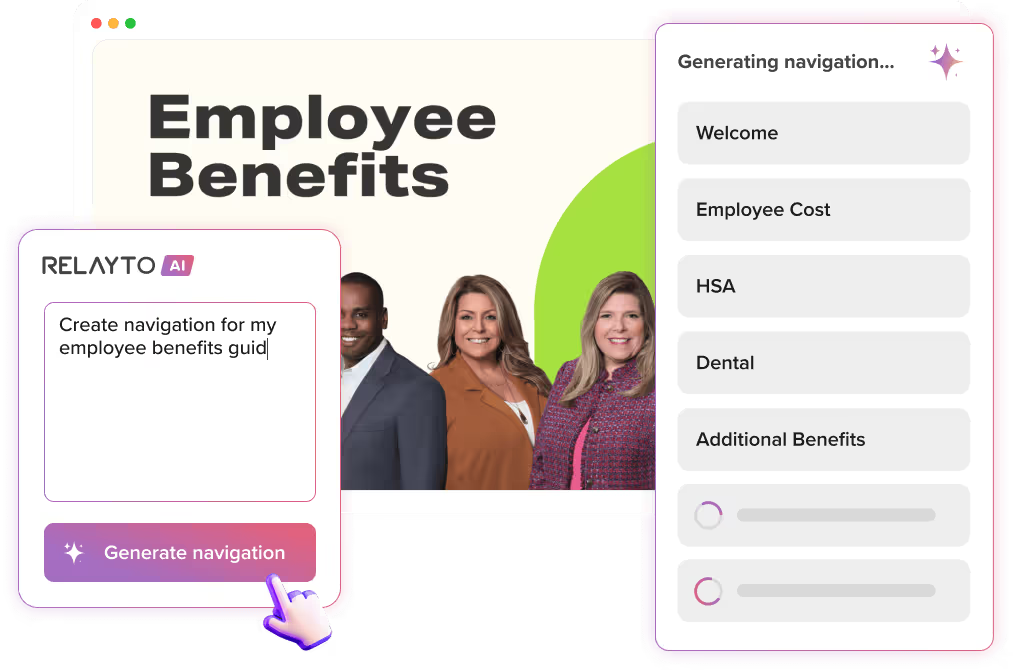

AI experience builder™

Drag. Drop. Done.

_74186341.avif)

.avif)

_73754160.avif)

_74186196.avif)

_73753547.avif)

_73753462.avif)

_73753937.avif)

One-click magic

Your content now talks back, securely

_73760588.avif)

.avif)

_74185188.avif)

We’ve got the answers

How is RELAYTO AI Content Chat different from ChatGPT?

RELAYTO AI Content Chatbot is designed specifically to provide information and answer questions related to the content of a particular PDF document or blog.

ChatGPT is a language model that uses internet text to generate human-like responses on a variety of topics, without being restricted to any particular content domain.

If my corporate policy prohibits ChatGPT, can I use RELAYTO AI Content Chat?

Unlike ChatGPT, RELAYTO AI Content Chat operates within a controlled environment where the content of the PDF document is the primary source of information. It does not generate responses based on external data sources or access to the internet. This controlled and contained nature may alleviate concerns related to compliance and policy restrictions.

What sources does Content Chat get information from?

When a particular document from a hub is opened, Content Chat will answer questions using the information given in documents from a hub. If a document is opened from the dashboard or via a direct link, only chat for that particular document will be shown. In the case of a hub, when a hub is opened, it will answer questions based on all given contents, including contents from subhubs if there are any.

What type of language model is used?

By default, we use OpenAI’s GPT-4 model to power the AI chat experience. However, this can be reconfigured to use our in-house deployment of LLaMA 4, which is currently in beta. Both models are large language models (LLMs) capable of advanced natural language understanding and generation.

How is the AI integrated within our platform?

The AI chat is tightly integrated into our platform via a retrieval-augmented generation (RAG) architecture. Here’s how it works:

1. Content from documents is processed and converted into embeddings, which are stored and indexed within our AWS-based infrastructure.

2. When a user asks a question, we perform a similarity search to identify the most relevant content chunks.

3. These relevant chunks are then passed along with the user’s question to the language model (e.g., GPT-4) to generate a grounded response that references specific content.

Is the model “closed,” and can it improve responses over time?

While the core language model (e.g., GPT-4) is not retrained on customer data, our system uses reinforcement mechanisms to improve performance over time.

This includes monitoring incorrect answers, collecting user feedback, and fine-tuning the retrieval process (e.g., improving document chunking and prompt engineering). These enhancements help ensure more accurate citations and responses without modifying the model itself.

Can we customize the initial three suggested questions?

Customization of the initial three prompt questions is on our roadmap. We plan to release this feature in early Q3 2025, which will allow tailored onboarding prompts based on the context of each experience.

Is my data safe?

Yes. We use industry-standard security protocols to protect your content end-to-end.

Transform complex documentation into compelling experiences

.avif)